Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

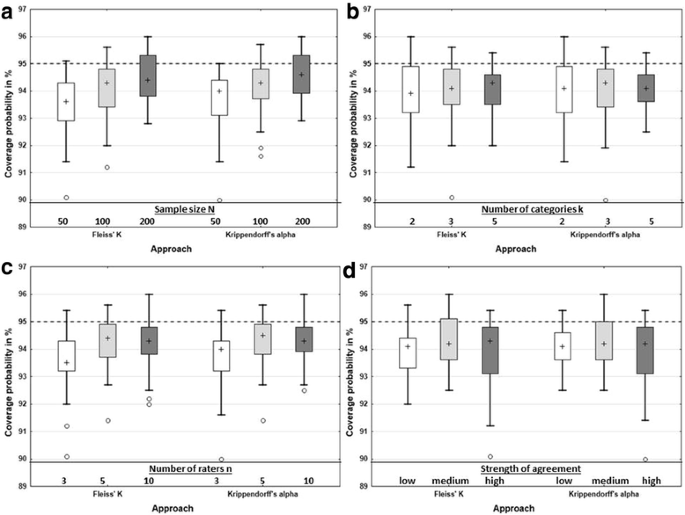

Measuring inter-rater reliability for nominal data – which coefficients and confidence intervals are appropriate? | BMC Medical Research Methodology | Full Text

(PDF) Measuring inter-rater reliability for nominal data - Which coefficients and confidence intervals are appropriate?

Interrater agreement statistics with skewed data: evaluation of alternatives to Cohen's kappa. | Semantic Scholar